FAQs

Revision by ChinweokeO (57 intermediate revisions by 3 users not shown).

'''Go back to [[Main Page|BioCompute Objects]].'''

== General ==

1. '''How can I build a BioCompute Object (BCO)?'''

You have several options for building a BCO. You can use the standalone "builder" tool available [https://biocomputeobject.org/builder here]. Alternatively, if you're using a platform that supports BioCompute, you can utilize tools built into that platform such as DNAnexus/precisionFDA, Galaxy, or Seven Bridges/Cancer Genomics Cloud. You may also choose to build an output into your workflow as a JSON file conforming to the standard.

2. '''What are the minimum requirements for conformance with the BioCompute standard?'''

The minimum requirements include inputs, outputs, data transformation steps, environment details, individuals involved in pipeline development or execution, and a plain text description of the pipeline's objectives. The standard allows for much greater detail if needed, and is extensible to include substantially more. The standard is organized into 8 domains, 5 of which are required and 3 of which are optional.
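As a rough illustration of the "5 required, 3 optional" split, the Python sketch below reports which required domains are absent from a BCO loaded as a dictionary. The domain key names are our reading of the IEEE 2791 schema and should be confirmed against it; the helper name <code>missing_required_domains</code> and the example data are hypothetical. Validation against the official schema remains the authoritative check.

```python
# Sketch only: domain names are assumed from the IEEE 2791 schema;
# confirm them against the official schema before relying on this.
REQUIRED_DOMAINS = (
    "provenance_domain",
    "usability_domain",
    "description_domain",
    "execution_domain",
    "io_domain",
)
OPTIONAL_DOMAINS = ("parametric_domain", "error_domain", "extension_domain")


def missing_required_domains(bco: dict) -> list:
    """Return the required domains that are absent from a BCO dictionary."""
    return [name for name in REQUIRED_DOMAINS if name not in bco]


# Hypothetical, heavily truncated BCO used only for illustration.
example_bco = {
    "provenance_domain": {},
    "usability_domain": ["Identify somatic variants in tumor samples."],
    "description_domain": {},
    "io_domain": {},
}

print(missing_required_domains(example_bco))  # ['execution_domain']
```

A presence check like this is only a quick pre-flight step; it says nothing about whether the contents of each domain are valid.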

3. '''How can I ensure my submission validates against the BioCompute schema?'''

Your submission should validate against the schema. The top-level schema file is available [https://opensource.ieee.org/2791-object/ieee-2791-schema/-/raw/master/2791object.json?ref_type=heads here].

4. '''Where can I find more information about the BioCompute standard and its organization?'''

The official repository for the standard is open access and can be found [https://opensource.ieee.org/2791-object/ieee-2791-schema/ here].

5. '''Can you provide an example of a completed BioCompute Object (BCO)?'''

Yes, you can view an example of a completed BCO [https://biocomputeobject.org/viewer?https://biocomputeobject.org/BCO_000452/1.0 here]. You can explore both table and raw JSON views.

6. '''Where would information regarding data sources and standard operating procedures be? Which specific domain?'''

Data sources should be recorded as described by the input_subdomain in the “[[Iodomain|io_domain]]” and the input_list in the “[[Description-domain|description_domain]]”. Standard operating procedures and any other information about data transformations SHOULD be elaborated upon in the “[[Usability-domain|usability_domain]]”.

7. '''How can a third party access URIs in a BCO?'''

URIs can point to local paths. In these cases, the necessary files must be shared with the parties that require access. If a URI links to a publicly accessible location, anyone can access it.

8. '''What is a SHA-1 checksum?'''

A SHA-1 checksum (Secure Hash Algorithm 1) is a fixed-size, 160-bit output computed from input data and used to identify files or documents and verify their integrity. In BioCompute Objects (BCOs), comparing a freshly calculated checksum with the originally recorded one confirms that a file or computational workflow has not changed. This helps ensure that BCOs are viewed and downloaded accurately.
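The comparison of calculated and original checksums can be sketched with Python's standard <code>hashlib</code> module. The function names and the sample bytes are hypothetical; this only illustrates the general technique, not how any particular BCO tool implements it.

```python
import hashlib


def sha1_checksum(data: bytes) -> str:
    """Return the SHA-1 digest of `data` as a 40-character hex string."""
    return hashlib.sha1(data).hexdigest()


def is_unchanged(data: bytes, recorded_checksum: str) -> bool:
    """Compare a freshly computed checksum with the recorded one."""
    return sha1_checksum(data) == recorded_checksum.lower()


original = b"ACGTACGT\n"            # hypothetical file contents
recorded = sha1_checksum(original)  # value that would be stored with the file

print(len(recorded) * 4)                      # 160 (bits)
print(is_unchanged(original, recorded))       # True
print(is_unchanged(b"ACGTACGA\n", recorded))  # False
```

For large files, the same idea applies, but the data would typically be read and hashed in chunks rather than loaded into memory at once.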

9. '''How do I sign in with an ORCID/What is an ORCID?'''

[https://orcid.org/ ORCID] stands for Open Researcher and Contributor ID. It is a free, unique identifier assigned to researchers that provides a standardized way to link researchers to their scholarly activities. To sign in with your ORCID, first create an account at https://orcid.org/. You can then use the credentials associated with your ORCID account to log in to view and edit BCOs.

== Pipeline Questions ==

1. '''Do pipeline steps have to represent sequentially run steps? How can you represent steps also run in parallel?'''

The standard does not mandate any particular numbering schema, but it’s best practice to pick the most logically intuitive numbering system. For example, a user may run a somatic SNV profiling step at the same time as a structural CNV analysis. If the alignment is step #2 in that pipeline, you might (arbitrarily) call the SNV profiling step #3 and the CNV analysis step #4. The fact that both pull from the output of the same step (#2) can easily be detected programmatically and represented in whatever way is suitable (e.g. graphically).
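One way such programmatic detection might look is sketched below in Python. The step dictionaries are a simplified, hypothetical stand-in for the pipeline steps of a real BCO (file names and step names are invented), and <code>find_parallel_steps</code> is an illustrative helper, not part of any BioCompute tool.

```python
from collections import defaultdict

# Hypothetical pipeline steps; file names and step names are illustrative.
pipeline_steps = [
    {"step_number": 1, "name": "trimming",
     "input_list": [{"uri": "reads.fastq"}],
     "output_list": [{"uri": "trimmed.fastq"}]},
    {"step_number": 2, "name": "alignment",
     "input_list": [{"uri": "trimmed.fastq"}],
     "output_list": [{"uri": "aligned.bam"}]},
    {"step_number": 3, "name": "SNV profiling",
     "input_list": [{"uri": "aligned.bam"}],
     "output_list": [{"uri": "snv.vcf"}]},
    {"step_number": 4, "name": "CNV analysis",
     "input_list": [{"uri": "aligned.bam"}],
     "output_list": [{"uri": "cnv.tsv"}]},
]


def find_parallel_steps(steps):
    """Map each producing step to the steps that consume its outputs,
    keeping only producers with more than one consumer."""
    producer_of = {}
    for step in steps:
        for out in step["output_list"]:
            producer_of[out["uri"]] = step["step_number"]

    consumers = defaultdict(set)
    for step in steps:
        for inp in step["input_list"]:
            producer = producer_of.get(inp["uri"])
            if producer is not None:
                consumers[producer].add(step["step_number"])

    return {p: sorted(c) for p, c in consumers.items() if len(c) > 1}


print(find_parallel_steps(pipeline_steps))  # {2: [3, 4]}
```

Steps that share a producer are only candidates for parallel execution; whether they were actually run concurrently is not recorded by the numbering itself.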

2. '''What is the nf-core plugin and how can I test it?'''

The plugin (nf-prov, as it is named in the configuration below), designed to facilitate Nextflow workflows, is now available for testing. To enable the BCO (BioCompute Object) format within the plugin, follow these instructions:

* Ensure you have the latest version of the plugin installed.

* Add the following code snippets to your Nextflow configuration file:

 plugins {
     id 'nf-prov'
 }
 prov.enabled = true
 prov {
     formats {
         bco {
             file = 'bco.json'
             overwrite = true
         }
     }
 }

These settings enable the BCO format and set the output file to "bco.json". Both snippets must be present in your configuration file for the BCO format to be activated.

For any questions related to the Nextflow environment, please ask [https://github.com/nextflow-io/nf-prov/issues here].

== Inputs and Outputs ==

1. '''What is the relationship and difference between input_list in description_domain and I/O Domain? Does input list in I/O domain contain all the input files of all the pipeline steps?'''

Yes. The I/O Domain is for global inputs. The input_list/output_list in the pipeline_steps is specific to individual steps and is used to trace data flow if granular detail is needed. If not needed, a user can simply look at the I/O Domain for the overall view of the pipeline inputs.

2. '''What is the relationship and difference between output_list in description_domain and I/O Domain? Does output list in I/O domain contain all the output files of all the pipeline steps?'''

The I/O Domain is for global outputs. The input_list/output_list in the pipeline_steps is specific to individual steps and is used to trace data flow if granular detail is needed. If not needed, a user can simply look at the I/O Domain for the overall view of the pipeline outputs.

[...]

Correct. The Execution Domain is for anything related to the environment in which the pipeline was executed, and the Description Domain is specific to the software in those steps. For example, if I’ve written a shell script to run the pipeline, and one step includes myScript.py to comb through results and pick out elements of interest, myScript.py might be an Execution Domain prerequisite, and any packages or dependencies called from within the script are Description Domain level prerequisites. Alternatively, if I’m using the HIVE platform, any libraries needed to run HIVE are Execution Domain level.

== BCO Scoring System ==

1. '''How is the score calculated?'''

The score is computed based on a few key factors:

* '''Usability Domain''': The length of the ''usability_domain'' field contributes to the base score.

* '''Field Length Modifier''': A multiplier (1.2) is applied to the base score to account for field length.

* '''Error Domain''': If the ''error_domain'' exists and is inserted correctly, 5 points are added.

* '''Parametric Objects''': A multiplier (1.1) is applied to the score for each parametric object in the ''parametric_objects'' list.

* '''Reviewer Objects''': Up to 5 points are added, one for each correct ''reviewer_object''.

2. '''What happens if the ''usability_domain'' is missing?'''

If the ''usability_domain'' or other required fields are missing, the BCO score is immediately set to 0, and the function returns the BCO instance without further calculations.

3. '''What is the purpose of the ''bco_score'' function?'''

The ''bco_score'' function calculates and assigns a score to each BioCompute Object (BCO) based on specific criteria in its contents. The score is influenced by the presence and characteristics of certain fields such as the ''usability_domain'', ''error_domain'', ''parametric_objects'', and ''reviewer_objects''.

4. '''What is the purpose of the ''field_length_modifier'' and ''parametric_object_multiplier''?'''

* ''field_length_modifier'' (1.2): This modifier adjusts the base score according to the length of the ''usability_domain'' field.

* ''parametric_object_multiplier'' (1.1): This multiplier increases the score based on the number of parametric objects present in the BCO, reflecting the complexity of the object.

5. '''What is the expected output of the ''bco_score'' function?'''

The ''bco_score'' function modifies the BCO instance by assigning a ''score'' attribute based on the criteria mentioned above. The updated BCO instance, with the score added, is returned after the BCO draft is saved.

6. '''How does the reviewer count affect the score?'''

For each ''reviewer_object'' present in the BCO (up to a maximum of 5 reviewers), the score increases by 1 point. This incentivizes the inclusion of peer review and validation within the object.
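The scoring factors described above can be put together in a small Python sketch. This is not the actual ''bco_score'' implementation: the order in which the factors are applied, the use of total character count as the "length" of the ''usability_domain'', and the function and parameter names are all assumptions made for illustration.

```python
FIELD_LENGTH_MODIFIER = 1.2          # multiplier named in the FAQ
PARAMETRIC_OBJECT_MULTIPLIER = 1.1   # multiplier named in the FAQ
ERROR_DOMAIN_BONUS = 5
MAX_REVIEWER_POINTS = 5


def sketch_bco_score(usability_domain, has_error_domain,
                     n_parametric_objects, n_reviewer_objects):
    """Illustrative score; the order of operations is an assumption."""
    if not usability_domain:
        # Missing usability_domain: score is 0, no further calculation.
        return 0.0

    # Assumption: "length" means the total number of characters.
    base = sum(len(text) for text in usability_domain)
    score = base * FIELD_LENGTH_MODIFIER

    if has_error_domain:
        score += ERROR_DOMAIN_BONUS

    # One 1.1 multiplier per parametric object.
    score *= PARAMETRIC_OBJECT_MULTIPLIER ** n_parametric_objects

    # One point per reviewer object, capped at five.
    score += min(n_reviewer_objects, MAX_REVIEWER_POINTS)
    return score


# Hypothetical inputs: a 10-character usability text, an error domain,
# two parametric objects, and seven reviewers (only five count).
example = sketch_bco_score(["0123456789"], True, 2, 7)
print(round(example, 2))
```

With these assumed rules, the example works out as 10 × 1.2 = 12, plus 5 gives 17, times 1.1² gives 20.57, plus 5 reviewer points gives 25.57. A different order of operations would give a different number, which is why the real function should be consulted for exact values.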

7. '''What is the significance of ''entAliases'' in the ''convert_to_ldh'' function?'''

The ''entAliases'' field is a list that stores multiple identifiers for the BioCompute Object. These include the ''object_id'', its full URL (entIri), and its entity type (entType), ensuring that the object can be referenced in different contexts.

8. '''How and where does the score appear on the BCO Builder?'''

The score is calculated by the ''bco_score'' function and is displayed within the BioCompute Object (BCO) metadata section of the BCO Builder interface after a BCO draft is saved.

== BCO for Knowledgebases ==

1. '''Can BCOs be used for curating databases?'''

[...]

The '''SAVE''' button only saves the entry on the website; it does not save it to the server. For a new draft, after editing, go to '''Tools''', first select a BCODB, then click on '''GET PREFIXES''' to choose a prefix, and lastly, click on '''SAVE PREFIX'''. For an existing draft, to save properly, click on '''SAVE''' first and then under '''Tools''', select '''UPDATE DRAFT'''.

2. '''What are the minimal requirements for validation and publishing with the BioCompute Objects (BCO) standard?'''

A submission simply needs to validate against the [https://opensource.ieee.org/2791-object/ieee-2791-schema/-/raw/master/2791object.json?ref_type=heads schema]. The minimal requirements include inputs, outputs, data transformation steps, environment details, person(s) who wrote or executed the pipeline, and a plain text description of the pipeline and its objectives.

== BCO Validation and Error messages ==

[...]

You may not be able to see this difference in the '''COLOR-CODED''' view, and will have to look in the '''TREE VIEW JSON''' or '''RAW JSON VIEW'''.

== Submitting BCOs to FDA ==

=== Submission Process and Requirements ===

# '''How should I submit a BCO with a regulatory submission?''' The FDA accepts both JSON (the original format of a BCO) and plain text (".txt") documents. BCO files can be included as JSON files under Module 5.3.5.4 and submitted as supporting documents in the Electronic Common Technical Document (eCTD) when submitting bioinformatics workflow data to CDER (Center for Drug Evaluation and Research) or CBER (Center for Biologics Evaluation and Research) for regulatory review. The Human Foods Program (HFP; formerly known as the Center for Food Safety and Applied Nutrition, or CFSAN) also accepts BCOs. Please check with your reviewer or review division for the logistical details of submitting a BCO. BCOs can contain links to files that are submitted via hard drive. File sharing via the FDA-HIVE portal is not currently available.

# '''What is the minimum content requirement for a sample eCTD submission to CDER?''' Module 1 and Module 5 (especially Module 5.3.5.4, where the BCO is located) are required. The submission must also include a cover letter (stating the purpose of the submission and the intended submission center), the FDA form (1571 for an IND; 356h for an NDA, BLA, or ANDA), and the BCO file.

# '''Can I submit a BCO without data files?''' Yes, but a sponsor submitting a BCO without the data should seek agreement with the review division prior to submission.

# '''Which center should the eCTD (containing BCO file(s)) be submitted to?''' Both CDER and CBER accept eCTD submissions, and both can review the submission files if requested.

# '''How do I submit an eCTD?''' The eCTD (which contains the BCO file) should be submitted via the Test Electronic Submission Gateway (ESG) to both CBER and CDER. For both centers to receive the submission, sponsors need to make a separate submission to each center via the ESG.

# '''Where should BCO files be placed within the eCTD?''' We recommend including BCO files as part of a study, referenced under a Study Tagging File, under Module 5.3.5.4 (Other Study Reports).

=== Dataset and File Submission Details === | |||

# '''How to include or indicate dataset information in the BCO and/or ESG submission?''' There are a few options to include the dataset information in the BCO or ESG submission: (Note: it is mandatory to include dataset information in the Cover Letter, however, if you would like to add more clarity, you may choose from the option 2-4 listed below) | |||

## Include in the dataset information in the Cover Letter: State how the datasets are submitted (via ESG or hard drive), the tracking number, estimated delivery date. This option is mandatory for BCO submission to the FDA to help reviewers to track down related datasets. | |||

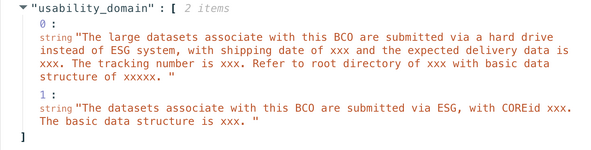

##Globally, suggest to include datasets information in the Usability Domain and refer to the root of the hard drive, see example below:[[File:Screenshot 2024-11-11 at 16.14.20.png|center|thumb|591x591px]] | |||

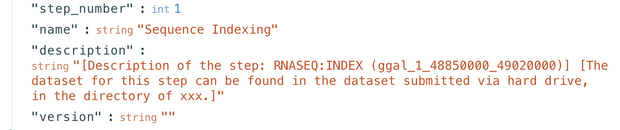

##If you prefer to mention the file structure and names in each step, then the datasets information can be included in description domain, see example below:[[File:Screenshot 2024-11-11 at 16.14.05.png|center|thumb|620x620px]] | |||

## If you would like to use the file structure and names from an existing schema, you may enter the schema URL in the Extension Domain. Using Figure 1 from this [https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1000424 paper] as an example, the following JSON schema defines the file structure and names:

 {
   "$schema": "http://json-schema.org/draft-07/schema#",
   "$id": "http://json-schema.org/draft-07/schema#",
   "title": "Computational Biology Project Structure",
   "type": "object",
   "properties": {
     "level 1": {
       "type": "object",
       "properties": {
         "msms": { "type": "string", "description": "Main directory for project data and scripts." },
         "level 2": {
           "type": "object",
           "properties": {
             "doc": {
               "type": "object",
               "description": "Project documentation",
               "properties": {
                 "paper": { "type": "string", "description": "Documents related to the project." }
               }
             },
             "data": { "type": "string", "description": "Directory for data files." },
             "src": { "type": "string", "description": "Source code directory." },
             "bin": { "type": "string", "description": "Executable files." },
             "results": {
               "type": "object",
               "description": "Results directory with analysis outputs.",
               "properties": {
                 "2009_01_15": { "type": "string", "description": "Data from analysis on 2009-01-15." },
                 "2009_01_23": { "type": "string", "description": "Data from analysis on 2009-01-23." }
               }
             }
           }
         }
       }
     },
     "level 3": {
       "type": "object",
       "properties": {
         "2009_01_14": {
           "type": "object",
           "description": "Initial analysis on 2009-01-14.",
           "properties": {
             "yeast": { "type": "string", "description": "Yeast dataset." },
             "worm": { "type": "string", "description": "Worm dataset." }
           }
         },
         "2009_01_15": {
           "type": "object",
           "description": "Follow-up analysis and summary on 2009-01-15.",
           "properties": {
             "split1": { "type": "string", "description": "First data split." },
             "split2": { "type": "string", "description": "Second data split." },
             "split3": { "type": "string", "description": "Third data split." }
           }
         }
       }
     }
   }
 }
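To see how a schema like the one above encodes a directory layout, the Python sketch below walks the nested "properties" keys and lists the paths they define. The helper <code>list_paths</code> is hypothetical, and the <code>excerpt</code> dictionary is a shortened copy of part of the schema (type and property names only), used so the example stays self-contained.

```python
def list_paths(schema: dict, prefix: str = "") -> list:
    """Recursively collect the property paths defined by a JSON schema."""
    paths = []
    for name, sub_schema in schema.get("properties", {}).items():
        path = f"{prefix}/{name}" if prefix else name
        paths.append(path)
        paths.extend(list_paths(sub_schema, path))
    return paths


# Shortened excerpt of the schema above (descriptions omitted).
excerpt = {
    "type": "object",
    "properties": {
        "level 2": {
            "type": "object",
            "properties": {
                "doc": {
                    "type": "object",
                    "properties": {"paper": {"type": "string"}},
                },
                "data": {"type": "string"},
            },
        }
    },
}

print(list_paths(excerpt))
# ['level 2', 'level 2/doc', 'level 2/doc/paper', 'level 2/data']
```

The same function can be applied to the full schema after loading it with <code>json.load</code>, which gives reviewers a flat listing of the expected file structure.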

# '''How do I submit large I/O files to the FDA?''' Please refer to the [https://www.fda.gov/industry/create-esg-account/frequently-asked-questions#_Q19 FDA site] for the definition of large I/O files. Large I/O files need to be sent on a hard drive to CBER, under which FDA-HIVE is hosted. All data will be uploaded to and downloaded from HIVE. CDER reviewers will use the CBER HIVE to receive and review this type of data. Please use the following address for the hard drive: <u>U.S. Food and Drug Administration</u> <u>Center for Biologics Evaluation and Research</u> <u>Document Control Center</u> <u>10903 New Hampshire Avenue</u> <u>WO71, G112</u> <u>Silver Spring, MD 20993-0002.</u> Also, please describe the dataset submission plan or details in the Cover Letter so that the reviewer knows the hard drive is coming and when to expect it.

# '''Are there any specific notes or memos that should be included with the hard drive?''' Yes, please ensure that the application number is attached to the hard drive for easier processing and identification. In addition, provide the shipping company name, transit number, and expected delivery date, when available, to the DCC (DCCEDR@fda.hhs.gov).

# '''Can I submit BCOs on a portable hard drive if requested by the FDA?''' BCOs can also be stored on a portable hard drive and referenced in the eCTD. This allows for easy access and verification of the BCO files during the review process. When the hard drive is submitted to HIVE (CBER), CDER also has access to the data.

# '''How much data needs to be submitted?''' There is no minimum requirement; it depends on the goal and the scope of the submission.

# '''Is the software used required for submission?''' The software used is not required to be submitted. However, some divisions may want software details; this depends very much on where the submission goes.

=== Tools and Technical Details ===

# '''What is the Electronic Submissions Gateway (ESG)?''' The Electronic Submissions Gateway (ESG) is the FDA's primary way to receive electronic regulatory submissions.

# '''What materials are required to register for an ESG account?''' The FDA ESG provides two methods for making submissions to the FDA: WebTrader and AS2. WebTrader is a web-based user interface for sending documents and receiving receipts and acknowledgments from the FDA with minimal technical expertise. Setting up WebTrader requires several preparatory steps: first, prepare an electronic Letter of Non-Repudiation Agreement; second, generate or obtain a free personal digital certificate. Both documents are needed during account registration. Optional preparatory steps include preparing a guidance-compliant test submission and a load test submission. For more details, review the formal FDA documentation [https://www.fda.gov/industry/create-esg-account/setting-webtrader-account-checklist here]. To register a WebTrader account, go to the ESG Account Management Portal at [https://esgportal.fda.gov/ https://esgportal.fda.gov/], select "New Account Registration", and follow the prompts. Please refer to the [https://www.fda.gov/industry/about-esg/esg-account-management-portal-user-guide ESG Account Management Portal User Guide] for instructions. After registering, users need to set up the local machine by downloading the required WebTrader Client Installer (note: it is only compatible with Windows machines). AS2 (System-to-System) lets industry partners access the Gateway via system-to-system communication. It provides an automated connection to the FDA for submissions, receipts, and acknowledgments, and is generally used by sponsors with a high volume of submissions. However, it requires AS2-compliant software and technical expertise. For more information about AS2, refer to the [https://www.fda.gov/industry/create-esg-account/setting-as2-account-checklist Setting up an AS2 Account Checklist].

# '''How much data can the ESG (Electronic Submissions Gateway) handle?''' The maximum size for a single file (non-folder) is 100 GB of uncompressed data. The maximum size for a multi-file submission (folder) is 100 GB of uncompressed data, and each single file (non-folder) it contains must be no larger than 6 GB of uncompressed data. ESG recommends that you send an email to [mailto:ESGHelpDesk@fda.hhs.gov ESGHelpDesk@fda.hhs.gov] for all submissions over 10 GB of uncompressed data. The FDA recommends that submissions greater than 15 GB and less than 25 GB in size be sent overnight, starting at 5 PM EST, to ensure receipt by the targeted FDA Center during the next business day. Large datasets need to be submitted via hard drive to CBER; for more information, refer to the FAQ on large I/O files above.

# '''For more ESG-related questions, refer to the official FDA ESG FAQ site [https://www.fda.gov/industry/create-esg-account/frequently-asked-questions#_Q19 here]. If you have questions for CBER, please contact CBER ESUB at esubprep@fda.hhs.gov; if the questions are for CDER, please contact CDER ESUB at esub@fda.hhs.gov; for general ESG-related questions, please contact the ESG Help Desk at esghelpdesk@fda.hhs.gov.'''
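The ESG size guidance described above can be summarized as a small decision helper. The Python sketch below is only a reading aid built from the thresholds quoted in this FAQ; the function name, parameters, and message wording are hypothetical, and the official FDA ESG documentation takes precedence if the limits change.

```python
def esg_size_guidance(total_gb: float, largest_file_gb: float,
                      is_folder: bool) -> list:
    """Return reminders for a submission, sizes in GB of uncompressed data."""
    notes = []
    if total_gb > 100:
        notes.append("over the 100 GB ESG maximum: use a hard drive sent to CBER")
    if is_folder and largest_file_gb > 6:
        notes.append("a file inside the folder is larger than 6 GB")
    if total_gb > 10:
        notes.append("email the ESG Help Desk before submitting")
    if 15 < total_gb < 25:
        notes.append("send overnight, starting at 5 PM EST")
    return notes


# Hypothetical 20 GB folder whose largest file is 5 GB.
for note in esg_size_guidance(20, 5, is_folder=True):
    print(note)
```

For the 20 GB example, the helper prints the Help Desk reminder and the overnight recommendation; a 5 GB single file would produce no reminders at all.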

=== Regulatory Applications and Use Cases ===

# '''For which types of regulatory submissions can BCOs be used?''' BCOs can be applied to the following types of regulatory submission:

#* Biologics license applications (BLAs);

#* Investigational new drug applications (INDs);

#* New drug applications (NDAs);

#* Abbreviated new drug applications (ANDAs).

== Archived Pilot Project Related FAQs ==

=== BCO Test Submission ===

# '''How do I submit an eCTD?''' The eCTD portion of the pilot (which contains the BCO file) should be submitted via the Test Electronic Submission Gateway (ESG) to both CBER and CDER. For both centers to receive the submission, sponsors need to make a submission to each center via the ESG Test Gateway.

## '''CDER:''' Participants should contact [mailto:ESUB-Testing@fda.hhs.gov ESUB-Testing@fda.hhs.gov] to request a sample application number for a BCO pilot submission. The subject line of the email should be “BCO Pilot”. Once the submission is sent through the ESG Test Gateway, the submitter will receive two gateway acknowledgments. The submitter will need to forward the second gateway acknowledgment, which contains the Core ID number, to the ESUB-Testing team so they can locate the submission and notify the FDA Pilot point of contact.

## '''CBER:''' Participants should contact [mailto:ESUBPREP@fda.hhs.gov ESUBPREP@fda.hhs.gov] to request a sample application number for a BCO pilot submission. The ESUBPREP team should also be notified when the submission is inbound. The subject line of any email related to the pilot should contain “BCO Pilot.” Once the submission is sent through the ESG Test Gateway, the submitter will receive up to three acknowledgments. The submitter will need to forward the second gateway acknowledgment, containing the Core ID number, to the ESUBPREP team (also referencing “BCO Pilot” in the subject line) so the submission can be located and shared with the FDA Pilot point of contact. Please note that if a third acknowledgment containing a rejection notification is generated, the submitter should ignore it.

# '''What is the Electronic Submissions Gateway (ESG)?''' The Electronic Submissions Gateway (ESG) is the FDA's primary way to receive electronic regulatory submissions. The Test ESG is a pre-production environment and will be used for this pilot.

# '''Are intermediate datasets/files required for the purpose of the pilot project?''' Currently, intermediate files are not required to be submitted in the initial phase of the pilot project.

=== Communication and Support ===

# '''How should sponsors contact the FDA regarding BioCompute Object submissions?''' There are points of contact at both CDER and CBER. If you have general submission and review questions regarding BCO submissions, please contact [mailto:cber-edata@fda.hhs.gov cber-edata@fda.hhs.gov] for submissions to CBER, or [mailto:edata@fda.hhs.gov edata@fda.hhs.gov] for submissions to CDER.

# '''What should sponsors do if they have general questions about BioCompute Object submissions but do not have a submission in-house?''' Sponsors who have general questions about BioCompute Object submissions and do not currently have a submission in-house can reach out to industry.biologics@fda.hhs.gov, an email address managed by OCOD (Office of Communication, Outreach and Development). The request will be triaged and directed to the appropriate individuals, who will provide assistance and address inquiries.

== Publications ==

To explore our publications, please visit [https://hive.biochemistry.gwu.edu/publications this link].

Latest revision as of 12:58, 15 October 2025

Go back to BioCompute Objects.

General

1. How can I build a BioCompute Object (BCO)?

You have several options for building a BCO. You can use the standalone "builder" tool available here. Alternatively, if you're using a platform that supports BioCompute, you can utilize tools built into that platform such as DNAnexus/precisionFDA, Galaxy, or Seven Bridges/Cancer Genomics Cloud. You may also choose to build an output into your workflow as a JSON file conforming to the standard.

2. What are the minimum requirements for conformance with the BioCompute standard?

The minimum requirements include inputs, outputs, data transformation steps, environment details, individuals involved in pipeline development or execution, and a plain text description of the pipeline's objectives. The standard allows for much greater detail if needed, and is extensible to include substantially more. The standard is organized into 8 domains, 5 of which are required and 3 are optional.

3. How can I ensure my submission validates against the BioCompute schema?

Your submission should validate against the schema, which you can reference directly at the top level domain provided here.

4. Where can I find more information about the BioCompute standard and its organization?

The official repository for the standard is open access and can be found here.

5. Can you provide an example of a completed BioCompute Object (BCO)?

Yes, you can view an example of a completed BCO here. You can explore both table and raw JSON views.

6. Where would information regarding data sources and standard operating procedures be? Which specific domain?

Data sources should be recorded as described by the input_subdomain in the “io_domain” and the input_list in the “description_domain”. Standard operating procedures and any other information about data transformations SHOULD be elaborated upon in the “usability_domain”.

7. How can a third-party access URIs in a BCO?

URIs can point to local paths. In these cases, the necessary files must be shared with the parties that require access. If a URI points to a publicly hosted resource, it is accessible to everyone.

8. What is a SHA1 Checksum?

A SHA-1 checksum, or Secure Hash Algorithm 1 checksum, is a fixed-size output (160 bits) generated from input data to uniquely identify and verify the integrity of files or documents. In BioCompute Objects (BCOs), it serves to ensure the unchanged state of computational workflows by comparing calculated and original checksums. This allows for accuracy in viewing and downloading BCOs.
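The comparison of calculated and original checksums can be sketched in a few lines of Python with the standard library's hashlib module. The function names and the throwaway demo file below are illustrative assumptions and are not part of any BCO tool.

```python
import hashlib
import os
import tempfile


def sha1_of_file(path, chunk_size=65536):
    """Return the hexadecimal SHA-1 digest of a file, read in chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path, expected_hex):
    """Compare a freshly calculated checksum with a recorded one."""
    return sha1_of_file(path) == expected_hex.lower()


# Demonstration with a temporary file that contains the bytes b"abc".
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
    demo_path = tmp.name

demo_digest = sha1_of_file(demo_path)
demo_matches = matches_checksum(demo_path, demo_digest)
print(demo_digest)   # 40 hexadecimal characters, i.e. 160 bits
print(demo_matches)
os.remove(demo_path)
```

If even one byte of the file changes, the recalculated digest no longer matches the recorded value.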

9. How do I sign in with an ORCID/What is an ORCID?

ORCID stands for Open Researcher and Contributor ID, and is a free, unique identifier assigned to researchers, providing a standardized way to link researchers to their scholarly activities. To sign in with your ORCID, create an account at: https://orcid.org/. Using the credentials associated with your ORCID account you can log in to view and edit BCOs.

Pipeline Questions

1. Do pipeline steps have to represent sequentially run steps? How can you represent steps also run in parallel?

The standard does not mandate any particular numbering schema, but it’s best practice to pick the most logically intuitive numbering system. For example, a user may run a somatic SNV profiling step at the same time as a structural CNV analysis. If both steps consume the output of an alignment step numbered #2, you might (arbitrarily) call the SNV profiling step #3 and the CNV analysis step #4. The fact that they pull from the output of the same step (#2) can easily be detected programmatically and represented in whatever way is suitable (e.g. graphically).
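As a rough illustration of detecting this programmatically, the Python sketch below groups steps that read from the same upstream step. The pipeline_steps content is a heavily trimmed, invented example (file names and step names are hypothetical), and the helper functions are not part of any BioCompute library.

```python
from collections import defaultdict

# Hypothetical, trimmed pipeline_steps; only the fields needed here are shown.
pipeline_steps = [
    {"step_number": 1, "name": "quality_control",
     "input_list": [{"uri": "reads.fastq"}],
     "output_list": [{"uri": "clean.fastq"}]},
    {"step_number": 2, "name": "alignment",
     "input_list": [{"uri": "clean.fastq"}],
     "output_list": [{"uri": "aligned.bam"}]},
    {"step_number": 3, "name": "snv_profiling",
     "input_list": [{"uri": "aligned.bam"}],
     "output_list": [{"uri": "snv.vcf"}]},
    {"step_number": 4, "name": "cnv_analysis",
     "input_list": [{"uri": "aligned.bam"}],
     "output_list": [{"uri": "cnv.tsv"}]},
]


def upstream_steps(steps):
    """Map each step number to the step numbers whose outputs it reads."""
    producer = {}
    for step in steps:
        for item in step["output_list"]:
            producer[item["uri"]] = step["step_number"]
    return {
        step["step_number"]: {
            producer[item["uri"]]
            for item in step["input_list"]
            if item["uri"] in producer
        }
        for step in steps
    }


def parallel_groups(steps):
    """Return groups of steps that depend on exactly the same upstream steps."""
    groups = defaultdict(list)
    for number, parents in upstream_steps(steps).items():
        groups[frozenset(parents)].append(number)
    return [sorted(group) for group in groups.values() if len(group) > 1]


print(parallel_groups(pipeline_steps))  # [[3, 4]]
```

Steps #3 and #4 end up in one group because both read only from step #2, regardless of the numbers they were given.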

2. What is the nf-core plugin and how can I test it?

The nf-core plugin, designed to facilitate Nextflow workflows, is now available for testing. To enable the BCO (BioCompute Object) format within the plugin, follow these instructions:

- Ensure you have the latest version of the plugin installed.

- Add the following code snippets to your Nextflow configuration file:

 plugins {
     id 'nf-prov'
 }
 
 prov.enabled = true
 
 prov {
     formats {
         bco {
             file = 'bco.json'
             overwrite = true
         }
     }
 }

These settings will enable the BCO format and specify the output file as "bco.json". Ensure you include these snippets in your configuration file to activate the BCO format.

For any questions related to the Nextflow environment, please ask here.

Inputs and Outputs

1. What is the relationship and difference between input_list in description_domain and I/O Domain? Does input list in I/O domain contain all the input files of all the pipeline steps?

Yes. The Input Domain is for global inputs. The input_list/output_list in the pipeline_steps is specific to individual steps and is used to trace data flow if granular detail is needed. If not needed, a user can simply look at the IO domain for the overall view of the pipeline inputs.

2. What is the relationship and difference between output_list in description_domain and I/O Domain? Does output list in I/O domain contain all the output files of all the pipeline steps?

The Output Domain is for global outputs. The input_list/output_list in the pipeline_steps is specific to individual steps and is used to trace data flow if granular detail is needed. If not needed, a user can simply look at the IO domain for the overall view of the pipeline outputs.

3. There is an access_time property for uri, which is referenced by input_list, output_list, input_subdomain, and output_subdomain. What does access_time mean for output files? Aren’t output files generated by pipeline steps?

Yes, they are. For output files, the access_time timestamp records when the file was created.

4. Can a script from the execution domain also be considered an input?

This is not usually the case, but it is possible for the script to be assessed as an input if it is used in the workflow to bring about an output.

Extensions

1. What is the role of extension_domain? How does it relate to other domains? Is it required in some pipeline steps? Or does it affect the execution? Or something else?

The Extension Domain is never required; it is always optional. It is a user-defined space for capturing anything not already captured in the base BCO. To use it, one generates an extension schema (referenced in the extension_schema) and the associated fields within the BCO. For example, if a user wants to include a specialized ontology with definitions, it can be added here. It is very flexible and is meant to capture anything idiosyncratic to that workflow that is not already captured in the standard.

2. How can BCOs be used for knowledgebases?

Using BioCompute’s pre-defined fields and standards, knowledgebases can generate a BioCompute Object (BCO) to document the metadata, quality control, and integration pipelines developed for different workflows. BCOs can be used to document each release. The structured data in a BCO makes it very easy to identify changes between releases (including changes to the curation/data processing pipeline, attribution to curators, or datasets processed), or revert to previous releases.

BCOs can be generated via a user-friendly instance of a BCO editor and can be maintained and shared through versioned, stable IDs stored under a single domain of that knowledgebase. BCOs not only provide complete transparency to their data submitters (authors, curators, other databases, etc.), collaborators, and users but also provide an efficient mechanism to reproduce the complete workflow through the information stored in different domains (such as description, execution, io, error, etc.) in the machine and human-readable formats.

The most common way of adapting BCOs for use in knowledgebases is by leveraging the Extension Domain. In this example, the Extension Domain is used for calling fields based on column headers. Note that the Extension Domain identifies its own schema, which defines the column headers and identifies them as required where appropriate. Because the JSON format of a BCO is human and machine-readable (and can be further adapted for any manner of display or editing through a user interface), BCOs are amenable to either manual or automatic curation processes, such as the curation process that populates those fields in the above example.

Prerequisites

1. What is the difference between software_prerequisites in execution_domain and prerequisites in the description_domain? Is the former global, while the latter only applies to one specific pipeline step?

Correct, Execution Domain is for anything related to the environment in which the pipeline was executed, and the Description Domain is specific to the software in those steps. So if I’ve written a shell script to run the pipeline, and in one step it includes myScript.py to comb through results and pick out elements of interest, myScript.py might be an Execution Domain prerequisite, and any packages or dependencies called from within the script are Description Domain level prerequisites. Alternatively, if I’m using the HIVE platform, any libraries needed to run HIVE are Execution Domain level.

BCO Scoring System

1. How is the score calculated?

The score is computed based on a few key factors:

- Usability Domain: The length of the usability_domain field contributes to the base score

- Field Length Modifier: A multiplier (1.2) is applied to the base score to account for field length

- Error Domain: If the error_domain exists and is inserted correctly, 5 points are added

- Parametric Objects: A multiplier (1.1) is applied to the score for each parametric object in the parametric_objects list

- Reviewer Objects: Up to 5 points are added, one for each correct reviewer_object
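The factors above can be sketched in Python as follows. This is only an illustration of the description in this FAQ, not the actual bco_score implementation: the function name, the flat parametric_objects and reviewer_objects keys, the way "length" is measured, and the order of operations are all assumptions.

```python
FIELD_LENGTH_MODIFIER = 1.2
PARAMETRIC_OBJECT_MULTIPLIER = 1.1
ERROR_DOMAIN_POINTS = 5
MAX_REVIEWER_POINTS = 5


def sketch_bco_score(contents):
    """Illustrative score built from the factors listed in this FAQ."""
    usability = contents.get("usability_domain")
    if not usability:
        # This FAQ states that a missing usability_domain gives a score of 0.
        return 0

    # Assumption: "length" is the total character count of all entries.
    score = sum(len(text) for text in usability) * FIELD_LENGTH_MODIFIER

    # Assumption: presence is enough; correctness is not checked here.
    if "error_domain" in contents:
        score += ERROR_DOMAIN_POINTS

    # Assumption: the multiplier is applied once per parametric object.
    for _ in contents.get("parametric_objects", []):
        score *= PARAMETRIC_OBJECT_MULTIPLIER

    # One point per reviewer object, capped at five.
    score += min(len(contents.get("reviewer_objects", [])), MAX_REVIEWER_POINTS)
    return score


example = {
    "usability_domain": ["abcde", "fghij"],          # 10 characters in total
    "error_domain": {},
    "parametric_objects": [{"param": "k"}, {"param": "e"}],
    "reviewer_objects": [{}, {}, {}],
}
example_score = sketch_bco_score(example)
print(round(example_score, 2))  # 23.57
```

In this example the arithmetic is (10 × 1.2 + 5) × 1.1 × 1.1 + 3 ≈ 23.57.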

2. What happens if the usability_domain is missing?

If the usability_domain or other required fields are missing, the BCO score is immediately set to 0, and the function returns the BCO instance without further calculations.

3. What is the purpose of the bco_score function?

The bco_score function calculates and assigns a score to each BioCompute Object (BCO) based on specific criteria in its contents. The score is influenced by the presence and characteristics of certain fields, such as the usability_domain, error_domain, parametric_objects, and reviewer_objects.

4. What is the purpose of the field_length_modifier and parametric_object_multiplier?

- field_length_modifier (1.2): This modifier adjusts the base score according to the length of the usability_domain field.

- parametric_object_multiplier (1.1): This multiplier increases the score based on the number of parametric objects present in the BCO, reflecting the complexity of the object.

5. What is the expected output of the bco_score function?

The bco_score function modifies the BCO instance by assigning a score attribute based on the criteria mentioned above. The updated BCO instance, with the score added, is then returned following the saving of the BCO draft.

6. How does the reviewer count affect the score?

For each reviewer_object present in the BCO (up to a maximum of 5 reviewers), the score increases by 1 point. This incentivizes the inclusion of peer review and validation within the object.

7. What is the significance of entAliases in the convert_to_ldh function?

The entAliases field is a list that stores multiple identifiers for the BioCompute Object. These include the object_id, its full URL (entIri), and its entity type (entType), ensuring that the object can be referenced in different contexts.

8. How and where does the score appear on the BCO Builder?

The score is calculated by the bco_score function and is displayed within the BioCompute Object (BCO) metadata section of the BCO Builder interface, following the saving of a BCO draft.

BCO for Knowledgebases

1. Can BCOs be used for curating databases?

Yes. BCOs have been used in this capacity, such as in the FDA’s ARGOS database of infectious diseases and the GlyGen database of glycosylation sites. The following recommendations are compiled from these use cases. Although these recommendations are built from practical experience, they may not address the needs of every database. Users are free to make modifications at their own discretion.

Using BioCompute’s pre-defined fields and standards, knowledgebases can generate a BioCompute Object (BCO) to document the metadata, quality control, and integration pipelines developed for different workflows. BCOs can be used to document each release. The structured data in a BCO makes it very easy to identify changes between releases (including changes to the curation/data processing pipeline, attribution to curators, or datasets processed), or revert to previous releases.

BCOs can be generated via a user-friendly instance of a BCO editor and can be maintained and shared through versioned, stable IDs stored under a single domain of that knowledgebase. BCOs not only provide complete transparency to their data submitters (authors, curators, other databases, etc.), collaborators, and users but also provide an efficient mechanism to reproduce the complete workflow through the information stored in different domains (such as description, execution, io, error, etc.) in the machine and human-readable formats.

The most common way of adapting BCOs for use in knowledgebases is by leveraging the Extension Domain. In this example, the Extension Domain is used for calling fields based on column headers. Note that the Extension Domain identifies its own schema, which defines the column headers and identifies them as required where appropriate. Because the JSON format of a BCO is human and machine-readable (and can be further adapted for any manner of display or editing through a user interface), BCOs are amenable to either manual or automatic curation processes, such as the curation process that populates those fields in the above example.

Saving and Publishing a BCO

1. Why is my BCO not saved after clicking SAVE?

The SAVE button only saves the entry on the website; it does not save it to the server. For a new draft, after editing, go to Tools, first select a BCODB, then click on GET PREFIXES to choose a prefix, and lastly, click on SAVE PREFIX. For an existing draft, to save properly, click on SAVE first and then, under Tools, select UPDATE DRAFT.

2. What are the minimal requirements for validation and publishing with the BioCompute Objects (BCO) standard?

A submission simply needs to validate against the schema. The minimal requirements include inputs, outputs, data transformation steps, environment details, person(s) who wrote or executed the pipeline, and a plain text description of the pipeline and its objectives.

BCO Validation and Error messages

The BCO Portal uses a JSON validator to validate BCOs, and the error messages it returns may be a little confusing. Below are some common validation results, with an explanation of what they mean and how to address them.

1. "[description_domain][pipeline_steps][0][step_number]": "'1' is not of type 'integer'"

The step_number in the BCO JSON needs to be an INTEGER.

This means it cannot be in quotes like this:

"step_number": "1",

Instead, it must be represented like this:

"step_number": 1,

You may not be able to see this difference in the COLOR-CODED view, and will have to look in the TREE VIEW JSON or RAW JSON VIEW.
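For BCOs edited outside the portal, a short Python check can locate and repair quoted step numbers before validation. This is a minimal sketch using only the standard json module; the helper names and the sample document are hypothetical, and it is not the validator the portal itself uses.

```python
import json

# Hypothetical BCO fragment in which the first step_number is a string.
raw = """
{
  "description_domain": {
    "pipeline_steps": [
      {"step_number": "1", "name": "alignment"},
      {"step_number": 2, "name": "variant_calling"}
    ]
  }
}
"""


def non_integer_steps(bco):
    """Return the indexes of pipeline steps whose step_number is not an integer."""
    steps = bco.get("description_domain", {}).get("pipeline_steps", [])
    return [
        index
        for index, step in enumerate(steps)
        # bool is a subclass of int in Python, so it is excluded explicitly.
        if not isinstance(step.get("step_number"), int)
        or isinstance(step.get("step_number"), bool)
    ]


def fix_step_numbers(bco):
    """Convert step_number values such as "1" into the integer 1, in place."""
    for step in bco.get("description_domain", {}).get("pipeline_steps", []):
        value = step.get("step_number")
        if isinstance(value, str) and value.strip().isdigit():
            step["step_number"] = int(value)
    return bco


bco = json.loads(raw)
before = non_integer_steps(bco)
after = non_integer_steps(fix_step_numbers(bco))
print(before, after)
```

The index reported by the first call corresponds to the position shown in the validator message, e.g. [pipeline_steps][0].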

Submitting BCOs to FDA

Submission Process and Requirements

- How should I submit a BCO with a regulatory submission? Both JSON (the original format of BCO) and plain text (".txt") documents are accepted by the FDA. BCO files can be included as a JSON file under Module 5.3.5.4 and submitted to the FDA as supporting documents in the Electronic Common Technical Document (eCTD) for the submission of bioinformatics workflow data to both CDER (Center for Drug Evaluation and Research) and CBER (Center for Biologics Evaluation and Research) for regulatory reviews. The Human Foods Program (HFP; formerly known as the Center for Food Safety and Applied Nutrition, or CFSAN) also accepts BCOs. Please check with your reviewer or review division for logistical details to submit a BCO. BCOs can contain links to files that are submitted via hard drive. File sharing via the FDA-HIVE portal is not currently available.

- What is the minimum content requirement for a Sample eCTD Submission to CDER? Module 1 and Module 5 (especially Module 5.3.5.4, where the BCO is located) are required. The submission must include a cover letter (stating the purpose of the submission and the intended submission center), the applicable FDA Form (1571 for IND and 356h for NDA/BLA/ANDA), and the BCO file.

- Can I submit a BCO without data files? Yes, but if the sponsor is submitting a BCO without the data they should seek agreement with the review division prior to submission.

- Which center should the eCTD (containing BCO file(s)) be submitted to? Both CDER and CBER centers accept eCTD submissions and they both have the ability to review the submission files if requested.

- How to submit eCTD? eCTD (which contains BCO file) should be submitted via Test Electronic Submission Gateway (ESG) to both CBER and CDER. In order for both centers to receive the submission, sponsors would need to make a submission to each center via the ESG Gateway.

- Where should BCO files be placed within the eCTD? We recommend including BCO files as part of a study, referenced under a Study Tagging File, under Module 5.3.5.4 (Other Study Reports).

Dataset and File Submission Details

- How to include or indicate dataset information in the BCO and/or ESG submission? There are a few options to include the dataset information in the BCO or ESG submission. (Note: it is mandatory to include dataset information in the Cover Letter; if you would like to add more clarity, you may also choose from options 2-4 listed below.)

- Include the dataset information in the Cover Letter: state how the datasets are submitted (via ESG or hard drive), the tracking number, and the estimated delivery date. This option is mandatory for BCO submissions to the FDA because it helps reviewers track down related datasets.

- Globally, we suggest including dataset information in the Usability Domain and referring to the root of the hard drive; see example below:

- If you prefer to mention the file structure and names in each step, the dataset information can be included in the Description Domain; see example below:

- If you would like to use the file structure and names from an existing schema, you may enter the schema URL in the Extension Domain. Using Figure 1 from this paper as an example, the following JSON schema defines the file structure and names:

 {
   "$schema": "http://json-schema.org/draft-07/schema#",
   "$id": "http://json-schema.org/draft-07/schema#",
   "title": "Computational Biology Project Structure",
   "type": "object",
   "properties": {
     "level 1": {
       "type": "object",
       "properties": {
         "msms": { "type": "string", "description": "Main directory for project data and scripts." },
         "level 2": {
           "type": "object",
           "properties": {
             "doc": {
               "type": "object",
               "description": "Project documentation",
               "properties": {
                 "paper": { "type": "string", "description": "Documents related to the project." }
               }
             },
             "data": { "type": "string", "description": "Directory for data files." },
             "src": { "type": "string", "description": "Source code directory." },
             "bin": { "type": "string", "description": "Executable files." },
             "results": {
               "type": "object",
               "description": "Results directory with analysis outputs.",
               "properties": {
                 "2009_01_15": { "type": "string", "description": "Data from analysis on 2009-01-15." },
                 "2009_01_23": { "type": "string", "description": "Data from analysis on 2009-01-23." }
               }
             }
           }
         }
       }
     },
     "level 3": {
       "type": "object",
       "properties": {
         "2009_01_14": {
           "type": "object",
           "description": "Initial analysis on 2009-01-14.",
           "properties": {
             "yeast": { "type": "string", "description": "Yeast dataset." },
             "worm": { "type": "string", "description": "Worm dataset." }
           }
         },
         "2009_01_15": {
           "type": "object",
           "description": "Follow-up analysis and summary on 2009-01-15.",
           "properties": {
             "split1": { "type": "string", "description": "First data split." },
             "split2": { "type": "string", "description": "Second data split." },
             "split3": { "type": "string", "description": "Third data split." }
           }
         }
       }
     }
   }
 }

- How to submit large I/O files to the FDA? Please refer to the FDA site for the definition of large I/O files. Large I/O files need to be sent on a hard drive to CBER, under which FDA-HIVE is nested. All data will be uploaded to and downloaded from HIVE. CDER reviewers will use the CBER HIVE to receive and review this type of data. Please use the following address for the hard drive: U.S. Food and Drug Administration, Center for Biologics Evaluation and Research, Document Control Center, 10903 New Hampshire Avenue, WO71, G112, Silver Spring, MD 20993-0002. Also, please describe the dataset submission plan or details in the Cover Letter so that the reviewer knows the hard drive is coming and when to expect it.

- Are there any specific notes or memos that should be included with the hard drive? Yes, please ensure that the application number is attached to the hard drive for easier processing and identification. In addition, provide the shipping company name, transit number and expected delivery date when available to DCC (DCCEDR@fda.hhs.gov).

- Can I submit BCOs on a portable hard drive if requested by the FDA? BCOs can also be stored on a portable hard drive and referenced in the eCTD. This allows for easy access and verification of the BCO files during the review process. When the hard drive is submitted to HIVE (CBER), CDER also has access to the data.

- How much data needs to be submitted? There is no minimum requirement; it depends on the goal of the submission and the scope of the submission.

- Is the software used required for submission? Software used is not required to be submitted. However, some divisions would want software details; this depends very much on where the submission goes.

Tools and Technical Details

- What is Electronic Submissions Gateway (ESG)? The Electronic Submissions Gateway (ESG) is the FDA's primary way to receive electronic regulatory submissions.

- What materials are required to register for an ESG account? FDA ESG provides two methods for making submissions to the FDA: WebTrader and AS2. WebTrader is a web-based user interface for sending documents and receiving receipts and acknowledgments from the FDA with minimal technical expertise. Setting up WebTrader requires several preparatory steps. First, prepare an electronic Letter of Non-Repudiation Agreement. Second, generate or obtain a free personal digital certificate. These two documents are needed during the account registration step. Other optional preparatory steps include preparing a guidance-compliant test submission and a load test submission. For more details, review the formal FDA documentation here. To register the WebTrader account, go to the ESG Account Management Portal at https://esgportal.fda.gov/, select "New Account Registration", and follow the prompts. Please refer to the ESG Account Management Portal User Guide for instructions. After account registration, users need to set up the local machine by downloading the required WebTrader Client Installer (Note: only compatible with Windows machines). AS2 (System-to-System) gives industry partners the option to access the Gateway via system-to-system communication. It provides an automated connection to the FDA for submissions, receipts, and acknowledgments, and is generally used by sponsors that have a high volume of submissions. However, this system requires AS2-compliant software and technical expertise. For more information regarding AS2, refer to the Setting up an AS2 Account Checklist.

- How much data can ESG (Electronic Submission Gateway) handle? The maximum size for a single file (non-folder) is 100 GB of uncompressed data. The maximum size for a multi-file submission (folder) is 100 GB of uncompressed data, and each single file (non-folder) it contains must be no larger than 6 GB of uncompressed data. ESG recommends that you send an email to [[1]] for all submissions over 10 GB of uncompressed data. The FDA recommends that submissions greater than 15 GB and less than 25 GB in size be sent overnight starting at 5PM EST to ensure receipt by the targeted FDA Center during the next business day. Large datasets need to be submitted via hard drive to CBER; for more information, refer to the FAQ above.
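The size thresholds in this answer can be turned into a quick pre-submission check. The Python sketch below is an unofficial illustration: the function name is hypothetical, a list with one entry is treated as a single file and a longer list as a folder, and 1 GB is assumed to mean 10^9 bytes.

```python
GB = 10**9  # assumption: decimal gigabytes of uncompressed data


def esg_size_notes(file_sizes):
    """Return notes for a submission, given uncompressed file sizes in bytes."""
    total = sum(file_sizes)
    notes = []
    if total > 100 * GB:
        notes.append("exceeds the 100 GB maximum")
    if len(file_sizes) > 1 and max(file_sizes) > 6 * GB:
        notes.append("folder contains a file larger than 6 GB")
    if total > 10 * GB:
        notes.append("email ESG before sending (over 10 GB)")
    if 15 * GB < total < 25 * GB:
        notes.append("send overnight, starting at 5PM EST")
    return notes


small_file = esg_size_notes([2 * GB])
medium_folder = esg_size_notes([5 * GB] * 4)   # 20 GB in total
bad_folder = esg_size_notes([7 * GB, 1 * GB])  # one file above 6 GB
print(small_file)
print(medium_folder)
print(bad_folder)
```

An empty list of notes means none of the thresholds mentioned in this answer apply to the submission.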

- For more ESG-related questions, refer to the official FDA ESG FAQ site here. If you have questions for CBER, please contact CBER ESUB at esubprep@fda.hhs.gov; if you have questions for CDER, please contact CDER ESUB at esub@fda.hhs.gov; for general ESG-related questions, please contact the ESG Help Desk at esghelpdesk@fda.hhs.gov.

Regulatory Applications and Use Cases

- For which types of regulatory submissions can BCOs be used? BCOs can be applied to the following types of regulatory submission:

- Biologics license applications (BLAs);

- Investigational new drug applications (INDs);

- New drug applications (NDAs);

- Abbreviated new drug applications (ANDAs).

Archived Pilot Project Related FAQs

BCO Test Submission

- How to submit eCTD? eCTD portion of the pilot (which contains BCO file) should be submitted via Test Electronic Submission Gateway (ESG) to both CBER and CDER. In order for both centers to receive the submission, sponsors would need to make a submission to each center via the ESG Test Gateway.

- CDER: Participants should contact [[2]] to request a sample application number to submit a BCO pilot submission. The subject line of the email should be “BCO Pilot”. Once the submission is sent through the ESG Test Gateway, the submitter will receive two gateway acknowledgments. The submitter will need to forward the second gateway acknowledgment, which contains the “Core ID” number, to the ESUB-Testing team so they can locate the submission and notify the FDA Pilot point of contact.

- CBER: Participants should contact [[3]] to request a sample application number to submit a BCO pilot submission. The ESUBPREP team should also be notified when the submission is inbound. The subject line of any email related to the pilot should contain “BCO Pilot.” Once the submission is sent through the ESG Test Gateway, the submitter will receive up to three acknowledgments. The submitter will need to forward the second gateway notification acknowledgment, containing the Core ID number, to the ESUBPREP team (also referencing “BCO Pilot” in the subject line) so the submission can be located and shared with the FDA Pilot point of contact. Please note that if a third acknowledgment is generated containing a rejection notification, it should be ignored by the submitter.

- What is Electronic Submission Gateway (ESG)? The Electronic Submissions Gateway (ESG) is the FDA's primary way to receive electronic regulatory submissions. The Test ESG is in a pre-production environment and will be used for this pilot.

- Are intermediate datasets/files required for the purpose of the pilot project? Currently, the intermediate files are not required to be submitted at the initial phase of the pilot project.

Communication and Support

- How should sponsors contact the FDA regarding BioCompute Object submissions? We have points of contact at both the CDER and CBER centers. If you have general submission and review questions regarding BCO submissions, please contact cber-edata@fda.hhs.gov for submissions to CBER, or edata@fda.hhs.gov for submissions to CDER.

- What should sponsors do if they have general questions about BioCompute Object submissions but do not have a submission in-house? Sponsors who have general questions about BioCompute Object submissions and do not currently have a submission in-house can reach out to industry.biologics@fda.hhs.gov, which is managed by OCOD (Office of Communication, Outreach and Development). The request will be triaged and directed to the appropriate individuals to provide assistance and address inquiries.

Publications

To explore our publications, please visit this link.